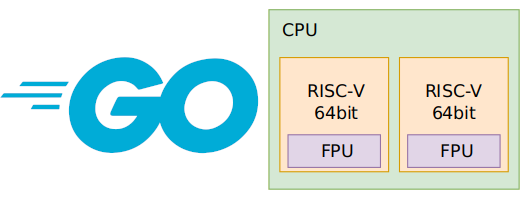

Playing with Go schedulers on a dual-core RISC-V

You can port the Go runtime to a system that doesn’t implement threads. An example would be the current WebAssembly port.

    func newosproc(mp *m) {
        panic("newosproc: not implemented")
    }

But if you want to run a bare-metal Go program on multiple cores, the thread abstraction is a must, unless you are ready to implement a completely new goroutine scheduler.

The goroutine scheduler uses operating system threads as workhorses to execute its goroutines. The goal is to efficiently run thousands of goroutines using only a few OS threads. Threads are considered expensive. It is also much cheaper for multiple goroutines to access shared resources when they run on the same thread. This is further optimized in Go by introducing the concept of a logical processor (called P) that can “execute” only one thread at a time and has a local cache of the most commonly used resources. There can be an unlimited number of threads sleeping in system calls, but they aren’t assigned to any P.

You can set the number of logical processors using the GOMAXPROCS environment variable or the runtime.GOMAXPROCS function. The default GOMAXPROCS for the Kendryte K210 is 2.
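To get a feel for the ratio of goroutines to logical processors, here is a minimal sketch for the standard Go toolchain (not Embedded Go). The helper name runMany and the goroutine count are illustrative assumptions. Note that runtime.GOMAXPROCS(0) only queries the current setting.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"sync/atomic"
)

// runMany starts n goroutines, waits for all of them to finish
// and returns how many of them actually ran.
func runMany(n int) int64 {
	var wg sync.WaitGroup
	var done int64
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			atomic.AddInt64(&done, 1)
		}()
	}
	wg.Wait()
	return atomic.LoadInt64(&done)
}

func main() {
	// An argument of 0 leaves the number of logical processors unchanged.
	fmt.Println("logical processors:", runtime.GOMAXPROCS(0))
	fmt.Println("goroutines finished:", runMany(10000))
}
```

All ten thousand goroutines complete even though only a handful of Ps are available to run them.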

Tasker

Embedded Go implements a thread scheduler for GOOS=noos called tasker. Tasker was designed from the very beginning as a multi-core scheduler, but the first multi-core tests and bug fixes were done on the K210 while working on the noos/riscv64 port.

Tasker is tightly coupled to the goroutine scheduler. It doesn’t have its own representation of a thread. Instead it directly uses the m structs. The Go logical processor concept is taken seriously. Any available P is associated with a specific CPU using the following simple formula:

cpuid = P.id % len(allcpus)

CPU is the name used by the tasker for any independent hardware thread of execution, a hart in the RISC-V nomenclature.
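The formula is easy to try out in plain Go. This is only a sketch of the arithmetic, not tasker code: the function name cpuForP and the parameter ncpu (standing in for len(allcpus)) are assumptions made for illustration.

```go
package main

import "fmt"

// cpuForP mirrors the formula cpuid = P.id % len(allcpus).
// ncpu stands in for len(allcpus).
func cpuForP(pid, ncpu int) int {
	return pid % ncpu
}

func main() {
	const ncpu = 2 // the K210 has two harts
	for pid := 0; pid < 4; pid++ {
		fmt.Printf("P%d -> CPU%d\n", pid, cpuForP(pid, ncpu))
	}
}
```

With two CPUs, even-numbered Ps land on CPU 0 and odd-numbered Ps on CPU 1, so with four Ps each core serves two of them.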

The tasker threads are cheap.

Print hartid

Let’s start playing with the Go schedulers and the two cores available in the K210. The basic tool we need is a function that returns the current hart id, which in the case of the K210 means the core id.

    package main

    import _ "github.com/embeddedgo/kendryte/devboard/maixbit/board/init"

    func hartid() int

    func main() {
        for {
            print(hartid())
        }
    }

As you can see the hartid function has no body. To define it we have to reach for Go assembly.

    #include "textflag.h"

    #define CSRR(CSR,RD) WORD $(0x2073 + RD<<7 + CSR<<20)
    #define mhartid 0xF14
    #define s0 8

    // func hartid() int
    TEXT ·hartid(SB),NOSPLIT|NOFRAME,$0
        CSRR (mhartid, s0)
        MOV S0, ret+0(FP)
        RET

The Go assembler doesn’t recognize privileged instructions so we used macros to implement the CSRR instruction.
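To see what the macro actually emits, the same arithmetic can be evaluated in ordinary Go. This is a sketch: the function name csrr is an assumption, and the constant 0x2073 is read here as the CSRRS opcode with funct3 = 2 and rs1 = zero, which is how the csrr pseudo-instruction is normally encoded.

```go
package main

import "fmt"

// csrr returns the 32-bit instruction word built by the CSRR macro:
// 0x2073 is the fixed part, rd goes to bits 7..11, csr to bits 20..31.
func csrr(csr, rd uint32) uint32 {
	return 0x2073 + rd<<7 + csr<<20
}

func main() {
	const mhartid = 0xF14 // CSR address used in the article
	const s0 = 8          // register number used in the article
	fmt.Printf("%#x\n", csrr(mhartid, s0)) // prints 0xf1402473
}
```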

Let’s use GDB+OpenOCD to load and run the compiled program. I recommend using the modified version of openocd-kendryte. You can use the debug-oocd.sh helper script as shown in the maixbit example. GDB isn’t required to follow this article. You can use the kflash utility instead, as described in the previous article.

Core [0] halted at 0x8000bb4c due to debug interrupt

Core [1] halted at 0x800093ea due to debug interrupt

(gdb) load

Loading section .text, size 0x62230 lma 0x80000000

Loading section .rodata, size 0x2c80f lma 0x80062240

Loading section .typelink, size 0x658 lma 0x8008ec20

Loading section .itablink, size 0x18 lma 0x8008f278

Loading section .gopclntab, size 0x3df15 lma 0x8008f2a0

Loading section .go.buildinfo, size 0x20 lma 0x800cd1b8

Loading section .noptrdata, size 0xf00 lma 0x800cd1d8

Loading section .data, size 0x3f0 lma 0x800ce0d8

Start address 0x80000000, load size 844500

Transfer rate: 64 KB/sec, 14313 bytes/write.

(gdb) c

Continuing.

Program received signal SIGTRAP, Trace/breakpoint trap.

runtime.defaultHandler ()

at /home/michal/embeddedgo/go/src/runtime/tasker_noos_riscv64.s:388

(gdb)

As you can see our program has been stopped in runtime.defaultHandler. This function handles unsupported traps (there are still a lot of them). Let’s see what happened.

(gdb) p $a0/8

$1 = 2

The A0 register contains the value of the mcause CSR saved at the trap entry (multiplied by 8). We can’t rely on the current mcause value because interrupts are enabled. But we can check if it’s the same.

(gdb) p $mcause

$2 = 2

It seems there were no other traps in the meantime. The mcause CSR contains a code indicating the event that caused the trap. In our case it’s the Illegal instruction exception. Let’s see what this illegal instruction is. The mepc register (return address from trap) was saved on the stack.

(gdb) x $sp+24

0x800d4820: 0x80062221

As before, we can check whether it’s the same as the current one.

(gdb) p/x $mepc

$2 = 0x80062220

Almost the same (the least significant bit is used to store the fromThread flag).
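The two decoding steps used in this session, dividing A0 by 8 to recover mcause and masking the lowest bit of the saved mepc, can be sketched in Go. The function names are assumptions made for illustration. The exception codes come from the RISC-V privileged specification, and only the first few are listed. The value 16 for A0 is inferred from the statement that A0 holds mcause multiplied by 8.

```go
package main

import "fmt"

// causeName names a few synchronous exception codes found in mcause.
func causeName(code uint64) string {
	switch code {
	case 0:
		return "instruction address misaligned"
	case 1:
		return "instruction access fault"
	case 2:
		return "illegal instruction"
	case 3:
		return "breakpoint"
	}
	return "other"
}

// splitSavedPC separates the saved mepc into the return address and
// the flag that, according to the article, is kept in the lowest bit.
func splitSavedPC(saved uint64) (pc uint64, fromThread bool) {
	return saved &^ 1, saved&1 != 0
}

func main() {
	a0 := uint64(16) // assumed: mcause (2) multiplied by 8
	fmt.Println(causeName(a0 / 8))

	pc, fromThread := splitSavedPC(0x80062221)
	fmt.Printf("%#x %v\n", pc, fromThread)
}
```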

(gdb) list *0x80062220

0x80062220 is in main.hartid (/home/michal/embeddedgo/kendryte/devboard/maixbit/examples/multicore/asm.s:9).

4 #define mhartid 0xF14

5 #define s0 8

6

7 // func hartid() int

8 TEXT ·hartid(SB),NOSPLIT|NOFRAME,$0

9 CSRR (mhartid, s0)

10 MOV S0, ret+0(FP)

11 RET

12

13 // func loop(n int)

All clear. Our program runs in the RISC-V user mode. We have no access to the machine mode CSRs. But there is a way to tackle this problem.

    func main() {
        runtime.LockOSThread()
        rtos.SetPrivLevel(0)
        for {
            print(hartid())
        }
    }

The rtos.SetPrivLevel function can be used to change the privilege level for the current thread. As it affects the current thread only, we must call runtime.LockOSThread first to wire our goroutine to its current thread (no other goroutine will execute in this thread). Now we can run our program.
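The same "lock first, then change per-thread state" pattern can be tried with the standard Go toolchain. The sketch below leaves out rtos.SetPrivLevel because the embedded/rtos package exists only in Embedded Go. The helper name onLockedThread is an assumption made for illustration.

```go
package main

import (
	"fmt"
	"runtime"
)

// onLockedThread runs f in a new goroutine that is wired to its own
// OS thread and returns the result of f.
func onLockedThread(f func() int) int {
	res := make(chan int)
	go func() {
		// From here on no other goroutine runs on this thread, so any
		// per-thread state changed by f stays private to this goroutine.
		runtime.LockOSThread()
		// There is deliberately no UnlockOSThread: in standard Go a
		// goroutine that exits while locked takes its thread with it,
		// so the modified thread is never reused by other goroutines.
		res <- f()
	}()
	return <-res
}

func main() {
	fmt.Println(onLockedThread(func() int { return 42 }))
}
```

Whether tasker disposes of a locked thread in the same way is not something this sketch verifies.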

As you can see our printing thread is locked to hart 0.

Multiple threads

Let’s modify the previous code in a way that allows us to easily alter the number of threads.

    package main

    import (
        "embedded/rtos"
        "runtime"

        _ "github.com/embeddedgo/kendryte/devboard/maixbit/board/init"
    )

    type report struct {
        tid, hartid int
    }

    var ch = make(chan report, 3)

    func thread(tid int) {
        runtime.LockOSThread()
        rtos.SetPrivLevel(0)
        for {
            ch <- report{tid, hartid()}
        }
    }

    func main() {
        var lasthart [2]int
        for i := range lasthart {
            go thread(i)
        }
        runtime.LockOSThread()
        rtos.SetPrivLevel(0)
        for r := range ch {
            lasthart[r.tid] = r.hartid
            print(" ", hartid())
            for _, hid := range lasthart {
                print(" ", hid)
            }
            println()
        }
    }

    func hartid() int

Now the main function launches len(lasthart) goroutines and after that prints in a loop the hartid for itself and all other goroutines. Every launched goroutine periodically checks its hartid and sends a report to the main goroutine.

Let’s start with main + 2 goroutines.

You can see we have a stable state: the main goroutine runs on hart 1, the reporting goroutines run on hart 0. Let’s add more goroutines.

The beginning looks interesting:

0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 1 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 1 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 1 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 1 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 1 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 1 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 1 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1

It seems that almost all reporting goroutines start on hart 0 but after a while they migrate to hart 1 and stay there. Edit: this doesn’t have to be true, because the lasthart array is zero-initialized, so a 0 may simply mean that no report has arrived yet. It should be initialized to some other value, e.g. -1.

Remember the goroutine scheduler can’t run more than 2 goroutines at the same time. Our reporting goroutines don’t do much. They spend most of their time sleeping on the full channel. It seems reasonable to gather them all on one P and give the other P to the busy main thread.
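One way to tell "no report yet" apart from "reported hart 0" is sketched below in plain Go. The names unknown, newLastHart and formatRow are assumptions made for illustration and are not part of the original program.

```go
package main

import "fmt"

// unknown marks a goroutine that has not sent any report yet.
const unknown = -1

// newLastHart returns a table of n entries, all marked as unknown.
func newLastHart(n int) []int {
	lasthart := make([]int, n)
	for i := range lasthart {
		lasthart[i] = unknown
	}
	return lasthart
}

// formatRow renders one output line, showing "-" for unknown entries.
func formatRow(lasthart []int) string {
	s := ""
	for _, hid := range lasthart {
		if hid == unknown {
			s += " -"
		} else {
			s += fmt.Sprintf(" %d", hid)
		}
	}
	return s
}

func main() {
	lasthart := newLastHart(4)
	lasthart[2] = 0 // only goroutine 2 has reported so far
	fmt.Println(formatRow(lasthart))
}
```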
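Why the reporting goroutines sleep so much can be seen with a non-blocking send in standard Go. This is a sketch: the helper name trySend is an assumption, and the channel capacity of 3 matches the ch variable from the program above.

```go
package main

import "fmt"

// trySend attempts to send v without blocking and reports whether
// the buffered channel had room for it.
func trySend(ch chan<- int, v int) bool {
	select {
	case ch <- v:
		return true
	default:
		return false // the buffer is full; a plain send would block here
	}
}

func main() {
	ch := make(chan int, 3)
	sent := 0
	for i := 0; i < 5; i++ {
		if trySend(ch, i) {
			sent++
		}
	}
	fmt.Println("sent", sent, "of 5") // prints: sent 3 of 5
}
```

In the original program the send is a plain blocking one, so every sender beyond the third parks until the main goroutine receives a report.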

Let’s increase the number of logical processors by adding runtime.GOMAXPROCS(4) at the beginning of the main function.

It seems the goroutine scheduler cannot reach a stable state. But we can see the hart id only. Can we also see the logical processor id? Yes, we can. Let’s modify the hartid function to return both.

    // func hartid() int
    TEXT ·hartid(SB),NOSPLIT|NOFRAME,$0
        CSRR (mhartid, s0)
        MOV 48(g), A0 // g.m
        MOV 160(A0), A0 // m.p
        MOVW (A0), S1 // p.id
        SLL $8, S1
        OR S1, S0
        MOV S0, ret+0(FP)
        RET

The print(" ", hartid()) call has been changed to print(" ", hid>>8, hid&0xFF) to show both numbers next to each other.
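The packing done by the assembly (P id shifted left by 8 bits and OR-ed with the hart id) and the matching unpacking can be written in plain Go as follows. This is a sketch: the function names pack and unpack are assumptions made for illustration.

```go
package main

import "fmt"

// pack combines a logical processor id and a hart id the same way
// the modified hartid function does: P id in the upper bits, hart id
// in the lowest 8 bits.
func pack(pid, hart int) int {
	return pid<<8 | hart
}

// unpack reverses pack, matching the hid>>8 and hid&0xFF expressions.
func unpack(v int) (pid, hart int) {
	return v >> 8, v & 0xFF
}

func main() {
	hid := pack(3, 1)
	pid, hart := unpack(hid)
	fmt.Println(pid, hart) // prints: 3 1
}
```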

As you can see, the goroutine scheduler keeps the main goroutine on P=0,1 and the reporting goroutines on P=2,3. Our simple rule that maps Ps to CPUs causes threads to jump between the K210 cores.

To end this article, let’s get back to two Ps but give our reporting goroutines something to do. As we’ve already got some practice with Go assembly, we will use it to write a simple busy loop. Thanks to this we’ll be sure the compiler won’t optimize this code away.

    // func loop(n int)
    TEXT ·loop(SB),NOSPLIT|NOFRAME,$0
        MOV n+0(FP), S0
        BEQ ZERO, S0, end
        ADD $-1, S0
        BNE ZERO, S0, -1(PC)
    end:
        RET
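For readers less familiar with Go assembly, the control flow of this loop can be modelled in plain Go. This is only a model for reading the assembly, not a replacement for it, since the Go compiler is free to optimize such code. The name loopModel and the returned iteration count are assumptions made for illustration.

```go
package main

import "fmt"

// loopModel follows the assembly step by step and returns the number
// of decrements executed.
func loopModel(n uint64) (iters uint64) {
	if n == 0 { // BEQ ZERO, S0, end
		return 0
	}
	for {
		n-- // ADD $-1, S0
		iters++
		if n == 0 { // BNE ZERO, S0, -1(PC) falls through
			return iters
		}
	}
}

func main() {
	fmt.Println(loopModel(0), loopModel(5)) // prints: 0 5
}
```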

You can find the full code for this last case on GitHub. You can play with other things, like the channel length, the loop count, odd GOMAXPROCS values, etc.

Workload disturbs the stable state from the second example. We can observe quite long periods when all goroutines run on the same logical processor which may be disturbing. Edit: It doesn’t have to be true. We print the whole line after each report received so it takes some lines to print a global change which affects many/all goroutines.
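The effect of printing one full line per received report can be reproduced with a small simulation in standard Go. It assumes, purely for illustration, that all n reporting goroutines change their value from 0 to 1 at the same instant and that their reports then arrive one at a time in order. The function name snapshots is hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// snapshots returns the lines printed while the reports of a single
// global change (every entry goes from 0 to 1) trickle in one by one.
func snapshots(n int) []string {
	lasthart := make([]int, n)
	var lines []string
	for tid := 0; tid < n; tid++ {
		lasthart[tid] = 1 // the report from goroutine tid arrives
		fields := make([]string, n)
		for i, hid := range lasthart {
			fields[i] = fmt.Sprint(hid)
		}
		lines = append(lines, strings.Join(fields, " "))
	}
	return lines
}

func main() {
	for _, line := range snapshots(4) {
		fmt.Println(line)
	}
}
```

A single simultaneous change shows up as a staircase spread over n lines, so a gradual pattern in the output does not by itself prove a gradual migration.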

Summary

It’s hard to draw any deeper conclusions from these superficial tests. It wasn’t the purpose of this article. We had some fun with Go, RISC-V assembler, debugger and underlying hardware, which is what you can expect from bare-metal programming. It seems the goroutine scheduler and tasker work in harmony with each other. A stricter approach is needed to draw more definitive conclusions that can be used to improve one or the other.

Michał Derkacz